In a bold move to bolster digital citizenship, Instagram has announced a groundbreaking partnership with leading education institutions to prioritize reports of online bullying and student safety. As social media continues to play an increasingly significant role in the lives of teenagers, concerns about online harassment and its devastating consequences have reached a boiling point. With millions of minors spending hours daily on the platform, it’s imperative that technology giants like Instagram take proactive steps to safeguard their online well-being. In this article, we’ll explore the exciting developments in this rapidly evolving space and examine how Instagram’s new partnership with schools aims to create a safer and more supportive online environment for young users.

Prioritizing Student Safety

As part of its ongoing efforts to improve online safety, Instagram has announced a new initiative to partner with schools to address the pressing issue of online bullying and student safety. This strategic move underscores the importance of creating a safe online environment for students, who are increasingly exposed to cyberbullying, harassment, and other forms of online abuse.

The partnership aims to leverage Instagram’s expertise in content moderation and student safety, combined with the knowledge and resources of schools, to develop effective strategies for preventing and addressing online bullying. By working together, Instagram and schools can create a safer and more supportive online community for students.

The Rise of Online Safety Regulations

Growing concerns around online safety have led to stricter regulations to protect children from harm. Ofcom, the UK’s communications regulator, has published a draft Children’s Safety Code that sets out specific guidelines for tech firms to follow. The Code requires companies to implement robust age verification technologies, filter and downrank content, and assess safety risks to mitigate potential harm.

The Code is significant because it marks a major shift in the way tech firms approach online safety. Firms will now be required to take a more proactive approach to ensuring the safety and well-being of children online. With the potential for fines of up to 10% of global annual turnover for violations, and even criminal liability for senior managers in certain scenarios, the stakes are high.

The Importance of Stricter Age Verification

One of the key aspects of the Children’s Safety Code is the requirement for accurate, robust, reliable, and fair age verification technologies. This is particularly important for protecting children from exposure to harmful content, such as material relating to suicide and self-harm, which can have devastating consequences.

Photo-ID matching, facial age estimation, and reusable digital identity services are all considered acceptable methods for age verification. In contrast, self-declaration of age and contractual restrictions on the use of services by children are no longer considered sufficient.
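The distinction between accepted and insufficient methods can be sketched in a few lines of Python. This is only an illustration of the rule as described above, not Ofcom’s wording or any platform’s implementation; the names `AgeCheckMethod`, `ACCEPTED_METHODS`, `is_sufficient`, and `has_sufficient_age_check` are hypothetical.

```python
from enum import Enum


class AgeCheckMethod(Enum):
    """Age-check methods named in the article (identifiers are hypothetical)."""
    PHOTO_ID_MATCHING = "photo_id_matching"
    FACIAL_AGE_ESTIMATION = "facial_age_estimation"
    REUSABLE_DIGITAL_ID = "reusable_digital_id"
    SELF_DECLARATION = "self_declaration"
    CONTRACTUAL_RESTRICTION = "contractual_restriction"


# Methods the article lists as acceptable; everything else is treated as insufficient.
ACCEPTED_METHODS = frozenset({
    AgeCheckMethod.PHOTO_ID_MATCHING,
    AgeCheckMethod.FACIAL_AGE_ESTIMATION,
    AgeCheckMethod.REUSABLE_DIGITAL_ID,
})


def is_sufficient(method: AgeCheckMethod) -> bool:
    """Return True if a single method is on the accepted list."""
    return method in ACCEPTED_METHODS


def has_sufficient_age_check(methods: list[AgeCheckMethod]) -> bool:
    """Return True if at least one of a service's methods is accepted."""
    return any(is_sufficient(m) for m in methods)
```

Under this sketch, a service that relies only on self-declaration fails the check, while one that adds facial age estimation alongside it passes.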

Content Filtering and Downranking

The Children’s Safety Code also sets out specific rules for the handling of content. For instance, suicide, self-harm, and pornography content will need to be actively filtered to prevent minors from accessing it. Other types of content, such as violence, will need to be downranked and made less visible in children’s feeds.

Ofcom will also encourage firms to pay particular attention to the “volume and intensity” of what kids are exposed to as they design safety interventions. This will involve assessing the potential impact of content on children’s mental health and well-being.
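To make the difference between filtering and downranking concrete, here is a minimal Python sketch of a feed builder for a minor’s account. It is an assumption-laden illustration, not how Instagram or any other platform actually ranks content: the `Post` type, the category labels, the `build_feed` function, and the `DOWNRANK_FACTOR` value are all hypothetical.

```python
from dataclasses import dataclass

# Categories the article says must be filtered out entirely for minors.
FILTERED_CATEGORIES = frozenset({"suicide", "self_harm", "pornography"})
# Categories the article says should be made less visible rather than removed.
DOWNRANKED_CATEGORIES = frozenset({"violence"})
# Hypothetical multiplier; the article does not specify how strongly to downrank.
DOWNRANK_FACTOR = 0.2


@dataclass(frozen=True)
class Post:
    post_id: str
    category: str
    score: float  # hypothetical relevance score, higher ranks first


def build_feed(posts: list[Post], viewer_is_minor: bool) -> list[Post]:
    """Return posts ordered by score, applying child-safety rules for minors."""
    if not viewer_is_minor:
        return sorted(posts, key=lambda p: p.score, reverse=True)

    ranked = []
    for post in posts:
        if post.category in FILTERED_CATEGORIES:
            continue  # filtered: never shown to a minor
        score = post.score
        if post.category in DOWNRANKED_CATEGORIES:
            score *= DOWNRANK_FACTOR  # downranked: still eligible, but pushed down
        ranked.append((score, post))

    ranked.sort(key=lambda pair: pair[0], reverse=True)
    return [post for _, post in ranked]
```

In this sketch a filtered post never reaches a minor’s feed, whereas a downranked post remains available but sits below ordinary content with a comparable original score.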

The Impact on Tech Firms

The Children’s Safety Code and Ofcom’s new guidelines will have a significant impact on tech firms, requiring them to implement robust age verification technologies, filter and downrank content, and assess safety risks to mitigate potential harm.

Firms will need to invest in new technologies and processes to ensure compliance with the guidelines. This will require significant resources and investment, but will also provide an opportunity for firms to improve their online safety practices and protect children from harm.

The Benefits of Compliance

Compliance with the Children’s Safety Code and Ofcom’s new guidelines will have several benefits for tech firms. Firstly, it will help to build trust with parents and children, who will be reassured by the firm’s commitment to online safety.

Secondly, compliance will help to reduce the risk of fines and even criminal liability, which could have significant financial and reputational consequences for firms.

Finally, compliance will provide an opportunity for firms to improve their online safety practices and protect children from harm, which will help to build a positive reputation and drive customer loyalty.

Conclusion

The Children’s Safety Code and Ofcom’s new guidelines are significant developments in the field of online safety. They require tech firms to implement robust age verification technologies, filter and downrank content, and assess safety risks to mitigate potential harm.

Compliance with the guidelines will have several benefits for tech firms, including building trust with parents and children, reducing the risk of fines and criminal liability, and improving online safety practices.

Overall, the Children’s Safety Code and Ofcom’s new guidelines are a major step forward in protecting children from harm online. They will help to create a safer and more supportive online community for children, and will provide an opportunity for tech firms to improve their online safety practices and build a positive reputation.

Fizz: A Safe and Local Marketplace for Students

Fizz, a social media platform focused on campus culture, has been expanding its presence in recent years. With a growing user base and funding, Fizz has become a significant player in the online marketplace for students.

The Rise of Fizz

Fizz’s founders, Teddy Solomon and Rakesh Mathur, have been instrumental in the app’s growth. From its early days as an anonymous social media platform to its current status as a local marketplace for students, Fizz has come a long way. The app now operates on 240 college campuses and 60 high schools, with 30 full-time staff and 4,000 volunteer moderators across schools.

Fizz has raised $41.5 million across multiple funding rounds, powering the app’s growing presence in campus culture. The platform’s focus on local buying and selling has resonated with students, who often need to buy and sell items such as clothes, textbooks, and bikes.

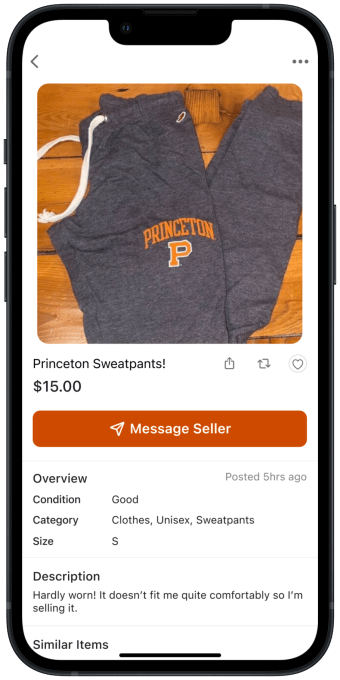

The Fizz Marketplace

The Fizz marketplace feature rolled out across Fizz’s hundreds of campuses between March and May of this year, in preparation for the predictable end-of-semester rush to sell. The platform has seen significant traction, with 50,000 listings posted and 150,000 DMs sent around items. Clothing is the most popular category, accounting for about 25% of listings.

Fizz’s emphasis on local buying and selling aimed at Gen Z has set it apart from other marketplaces. The platform’s anonymous nature, combined with its requirement for users to verify a school email account, has helped to create a sense of trust among users.

Maintaining a Safe Environment

However, Fizz has struggled to maintain a safe environment on all of its campuses. In one high-profile case, a Fizz community wreaked havoc on a high school, as students hid behind anonymity to shame and torment other students and faculty.

Fizz has since renewed its focus on content moderation. The platform has voluntarily shut down two communities in response to feedback from parents and administrators. Maintaining a safe environment is critical to Fizz’s success.

Implications and Future Developments

The Future of Online Safety

The UK’s Online Safety Act, passed last fall, has significant implications for online safety. Ofcom’s Children’s Safety Code, currently in draft form, will push tech firms to run better age checks, filter and downrank harmful content, and apply around 40 other steps to assess harmful content.

The Code is significant because it could force a step-change in how Internet companies approach online safety. The government has repeatedly said it wants the U.K. to be the safest place to go online in the world.

Practical Applications and Considerations

Instagram’s partnership with schools to prioritize reports of online bullying and student safety is a positive step towards creating a safer online environment. However, it also raises important questions about the balance between user experience and safety concerns.

As online safety regulations and initiatives continue to evolve, it is essential to consider the practical applications and implications of these changes. Firms must prioritize child safety in their design and implementation, while also ensuring that users have a positive experience.

Conclusion

In a significant move to bolster online safety, Instagram has partnered with schools to prioritize reports of online bullying and student safety. The collaboration aims to identify, address, and prevent incidents of online harassment, cyberbullying, and other forms of digital harm. According to the report, Instagram will use machine learning tools to detect and remove hate speech, and will work closely with schools to provide resources and support for students who have experienced online bullying.

This development is a significant step forward in addressing the growing concern of online safety for minors. As the prevalence of social media continues to rise among younger generations, the need for effective measures to prevent and respond to online bullying has never been more pressing. The partnership between Instagram and schools has the potential to make a tangible impact on the lives of students, providing them with a safer and more supportive online environment.

As the digital landscape continues to evolve, it’s essential that social media companies like Instagram take proactive steps to prioritize the safety and well-being of their users, particularly young people. By working closely with schools and leveraging cutting-edge technology, Instagram can help create a safer online community, where students can thrive without fear of online harassment or bullying. As we move forward in this digital age, it’s up to all of us to hold technology companies accountable for protecting the most vulnerable members of society – our children.
